What are /r/realAMD's favorite Products & Services?

From 3.5 billion Reddit comments

The most popular Products mentioned in /r/realAMD:

The most popular Services mentioned in /r/realAMD:

SlideShare

HWiNFO (32/64)

Speedtest by Ookla

Shadow

RawTherapee

Driver Store Explorer

The Motley Fool

Slickdeals

The most popular reviews in /r/realAMD:

> The crux of his/her thinking was that Intel will likely release a 'ready to go' 10nm architecture in 2019 for the first time. I think this is entirely possible. I cannot say what the performance uplift will be vs. 14nm, but I think it'll be pretty notable. Smaller process, more power efficient, more space per die to pack in transistors. All the usual stuff. There will be nothing majorly different from an architecture standpoint about it, but that was to be expected.

Entirely possible, even if it's hard not to be cynical about 10nm at this point. But Intel don't rate 10nm or even 10nm+ transistor performance above that of 14nm++, which leaves any performance gains to come from adding cores, changing the architecture, or adding cache. That applies at least on the desktop; laptop and server parts tend to be power-limited rather than transistor-performance-limited, so 10nm will provide more headroom there.

Pretty sure that's just the physical layout. Slide 50 here explicitly shows 8 IF links on the IO die, and it would complicate the chiplets to have to switch data rather than firing it all at the IO die.

To be sure, nVidia listed 21 'RTX games coming soon' on slide 51 of their introduction presentation.

Slide 19 is where the problem is: you have a ginormous (read: extremely expensive to make) 754mm^2 die (2080 Ti) of which over 1/3 is devoted to raytracing bits of a scene, and 1/3 of what remains is tensor cores whose job is to make it look like it did more raytracing than was actually done. So less than half the die is doing work in legacy situations, with all the cost of a big die. I'm viewing it for now as a tech demo.
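Taking the comment's fractions at face value (exactly 1/3 for raytracing hardware and 1/3 of the remainder for tensor cores, both rough assumptions rather than official die-area figures), the share left for conventional work is 1 - 1/3 - (1/3)(2/3) = 4/9 of the die. A minimal sketch of that arithmetic:

```python
# Rough die-area split for the 754 mm^2 RTX 2080 Ti die, using the
# comment's approximate fractions (assumptions, not official figures).
DIE_AREA_MM2 = 754.0
RT_FRACTION = 1 / 3              # "over 1/3" devoted to raytracing
TENSOR_FRACTION_OF_REST = 1 / 3  # "1/3 of what remains" is tensor cores


def legacy_share(rt: float, tensor_of_rest: float) -> float:
    """Fraction of the die left for conventional (rasterization) work."""
    remaining = 1.0 - rt
    tensor = remaining * tensor_of_rest
    return remaining - tensor


share = legacy_share(RT_FRACTION, TENSOR_FRACTION_OF_REST)
print(f"legacy share: {share:.3f} ({share * DIE_AREA_MM2:.0f} mm^2)")
```

With exactly 1/3 for raytracing this gives about 0.444 of the die (roughly 335 mm^2); since the comment says "over 1/3", the real share would be a little lower still.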

Clearly even this hybrid approach isn't going to be affordable until 7nm matures enough to allow large dies. Doing true 4K 60fps raytracing, though, is going to require an order of magnitude more transistors, and given the rate at which manufacturers disappear with each node step, it's not clear to me that anyone's going to be able to afford a foundry capable of putting sufficient transistors on a chip. So nVidia's approach of doing some real raytracing and using AI for the rest could conceivably be the only one we ever get at an affordable price.

I have not overclocked or played with voltages for a long time, but there are still programmes out there that run tests on your hardware. For a CPU, try IntelBurnTest. It will run your CPU at a high load and report any errors. You can also use HWiNFO to verify the voltages in use. Together these will tell you whether the CPU is running as it should and how much voltage it draws during the test.

If you want to be clever, you can use IntelBurnTest as a metric to confirm the CPU is working as it should, then gradually reduce your voltage until you see a drop-off. This is how you would go about undervolting without any loss of speed. Most CPUs run at a higher voltage than necessary, because the stock voltage is set to cater for all bins. Most of the time you can rein that voltage back, sometimes quite a bit.
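The step-down procedure described above can be sketched as a simple loop. This is a minimal illustration, not a tool: `find_min_stable_voltage` and the `is_stable` callback are hypothetical names, and in practice "applying" a voltage and checking stability means changing it in your BIOS or tuning utility, running a stress test such as IntelBurnTest, and watching for errors or a drop in its score.

```python
from typing import Callable


def find_min_stable_voltage(
    start_mv: int,
    step_mv: int,
    floor_mv: int,
    is_stable: Callable[[int], bool],
) -> int:
    """Step the core voltage down until the stability check fails.

    Returns the lowest voltage (in millivolts) that still passed.
    `is_stable` is a hypothetical hook standing in for "apply this
    voltage, run the stress test, and confirm no errors or score drop".
    `floor_mv` is a hard lower bound so the loop never goes below it.
    """
    if not is_stable(start_mv):
        raise ValueError("starting voltage is already unstable")
    last_good = start_mv
    candidate = start_mv - step_mv
    while candidate >= floor_mv and is_stable(candidate):
        last_good = candidate
        candidate -= step_mv
    return last_good


# Illustrative only: pretend the chip is stable at 1180 mV and above.
print(find_min_stable_voltage(1300, 25, 1000, lambda mv: mv >= 1180))
```

With the made-up threshold of 1180 mV and 25 mV steps, the loop stops at 1175 mV and returns 1200, the last step that passed.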

My upgrade from Ryzen 7 1800X to Ryzen 9 3950X was similarly trouble-free, but also involved replacing the liquid cooler with an AMD-recommended 280mm radiator (went from H100i v2 to H115i RGB Platinum).

But things went wrong elsewhere when it turned out the existing G.SKILL memory modules had failed, returning errors in a localized memory region at speeds that were previously known to work properly on the old processor (DDR4-2933 14-16-16-36). I ended up having to run to the local Best Buy to grab some replacement modules (Corsair Vengeance RGB Pro CMW32GX4M2C3200C16, 2x16GB, DDR4-3200 16-18-18-36). Astaroth started right up and ran the memory modules at rated speed with no hesitation at all, and managed to complete one full pass of Memtest86+ without any errors. (I'll be sending the faulty modules to G.SKILL for replacement under warranty once I get a chance.)

This upgrade opened up new possibilities on the Demon (as I like to call it). Assassin's Creed Odyssey, which often stuttered in complex scenes because of a processor bottleneck on the Ryzen 7 1800X (it'll happily max out 16 hardware threads), now runs consistently smoothly. I can even do high-quality CPU-based recording at a full 1440p and 60 fps with only a limited impact on performance, something I could never do on the Ryzen 7 1800X. Batch-processing 24-megapixel RAW images in RawTherapee (an open-source alternative to Lightroom; I used to be a sports photographer for my college) now takes half the time, processing each photo in less than five seconds.

As for benchmarks... Cinebench R20 scores were as high as 9507 with Precision Boost Overdrive enabled, 9335 at stock. I haven't spent huge amounts of time benching this thing beyond this and the RawTherapee test, but anything that can make use of all these cores simply gets destroyed by this monster of a processor.
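The two Cinebench R20 scores put the gain from Precision Boost Overdrive at a bit under 2%. Because 9507 is the best PBO run quoted rather than an average, treat the result as a best case. A quick check of the arithmetic:

```python
def percent_gain(baseline: float, tuned: float) -> float:
    """Relative improvement of `tuned` over `baseline`, in percent."""
    return (tuned - baseline) / baseline * 100.0


STOCK_SCORE = 9335  # Cinebench R20 at stock (from the post)
PBO_SCORE = 9507    # Cinebench R20, best run with PBO enabled (from the post)

print(f"PBO uplift: {percent_gain(STOCK_SCORE, PBO_SCORE):.1f}%")
```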

Astaroth unchained. 'Nuff said.

> You ignored the part where updating the console's firmware is separate from updating the game.

Your argument was: if I never go online, they cannot assert any control, and I can just continue to play my games offline.

My argument is, new games that gamers actually want to play require them to take the console online to get the latest firmware, thus allowing the companies to once again assert control over their gaming experience by altering the software.

I didn't ignore it, you just didn't understand what I was saying. You keep talking about control, I am trying to show you how these companies continually assert their control.

> The PC market is not a small market. It doesn't only include people who play on a high-end CPU + GPU from the last year or two. Plenty of gamers exist who play on like shitty integrated graphics and a slow dual core CPU from 10 years ago.

Of course it's not a small market; go back and read my first post. I said the market is huge and consistently growing. You keep wanting to argue with me for some reason, but all I'm saying is that the casual crowd accepts consoles, locked-down nature and all, in exchange for a cheap and convenient way to play games, and they will accept streaming too.

I mean, you know there are successful businesses doing game streaming already. https://shadow.tech is one of them, and they're doing good business.

We also have to consider the publishers' angle. We already have console exclusives, and people do buy specific consoles to gain access to those exclusive games. I fully expect streaming to gain exclusives too, since it means zero piracy for publishers.

This is what bugs me the most about how Google is pitching this.

Bandwidth is only half of the equation.

You could have a 500Mbps up/down connection, but if your ping to the server is 100ms+, it's still going to be a bad time.

As an example, here in NZ we actually have pretty decent internet, with an average of 87Mbps down and 52Mbps up (https://www.speedtest.net/global-index/new-zealand).

That's well within the recommended bandwidth for Stadia. The catch is that most of the big servers are located in Australian data centers, and even with a fiber connection you are looking at around 30ms to ping a data center in Sydney from Auckland.

30ms is borderline (I believe 20ms or lower is the current recommendation from people like Parsec) so the gaming experience will be questionable at best.
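One way to see why extra bandwidth doesn't help here is to express the round trip in frames. The sketch below assumes a 60 fps stream (an assumption; the comment doesn't state a frame rate) and counts only the network round trip, so encoding, decoding and display latency would add more on top.

```python
def rtt_in_frames(rtt_ms: float, fps: float = 60.0) -> float:
    """How many frame intervals a network round trip spans at a given frame rate.

    Assumes a fixed frame rate (60 fps by default); counts network
    round-trip time only, not encode/decode or display latency.
    """
    frame_time_ms = 1000.0 / fps
    return rtt_ms / frame_time_ms


# Round-trip times taken from the comment.
cases = [
    ("20ms guideline", 20),
    ("Auckland to Sydney", 30),
    ("100ms+ ping", 100),
]
for label, rtt in cases:
    print(f"{label}: {rtt} ms = {rtt_in_frames(rtt):.1f} frames at 60 fps")
```

At 60 fps each frame lasts about 16.7 ms, so 20 ms is already more than one frame of delay, 30 ms is close to two, and 100 ms is six, no matter how fast the connection's bandwidth is.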

> I mean the motherboard's manufacturer, not Acer.

Hey there, any idea where I could get this, and the best way to test?

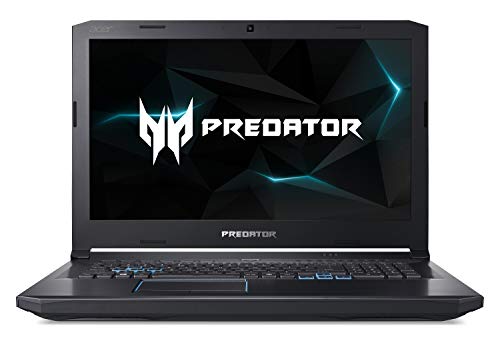

This is the laptop: https://www.amazon.com/Acer-Predator-PH517-61-R0GX-Processor-Graphics/dp/B07GWX5X26

Also, Ryzen 5 2600

$150 local

$160 shipped